By Doug Ward

A colleague’s daughter recently finished her first year of college. In high school, he said, she had never really had to study to get good grades. In college, though, she had to adjust her study habits and her thinking after her early grades dipped below the A’s and B’s she had routinely – and easily – received.

That sort of dip in grades is common among traditional freshmen as they learn to live away from home for the first time, deal with the liberation and temptations of personal independence, and try to make sense of the academic expectations of college. How they deal with that jolt can affect everything from their choice of majors to their ability to stay in college.

Jennifer Meta Robinson, an anthropology professor at Indiana University-Bloomington, has been studying this phenomenon, which she calls “grade surprise.” Students usually have a good sense of the grades they will receive on assignments or exams, or in a class. When that expectation doesn’t match reality, though, they experience grade surprise.

Robinson explained her research to the steering committee of the Bay View Alliance earlier this month in Bloomington, Indiana. Both Indiana and KU are members of the Bay View Alliance, a consortium of 10 research-intensive universities that are working to elevate teaching and improve learning among students. Robinson and colleagues in chemistry, computer science and informatics recently received a mini-grant from the Association of American Universities to continue surveying students and analyzing university data. They hope to answer several questions about grade surprise:

- How does grade surprise affect retention in various majors?

- Does the power of grade surprise grow as students move through additional classes?

- What approaches help students recover when they encounter grade surprise?

Robinson’s hypothesis is that grade surprise impedes student progress but can be mitigated. When students are overconfident, she said, failure is more painful than when they have low expectations about their grades.

“Surprise creates pain,” Robinson said.

She is also looking at the flip side of that: whether there is positive grade surprise.

“There’s a human tendency to rewrite the past,” she said. “We mitigate our pain by retelling our story in a way that makes it less surprising.”

For instance, students might tell themselves that a low grade was the instructor’s fault or that people like them just don’t do well with this type of material or in these types of classes. That type of thinking can easily push students out of classes or majors.

Interestingly, few students seem to blame instructors when grades come in lower than expected.

“We were surprised at how few students said, ‘The teacher had it in for me,’” Robinson said. “Or, ‘This was out of left field. I studied this other thing and it wasn’t on the test.’ There was very little of that. It really was more about what I can do, what I practice, where I can spend more time. The locus of control was within.”

Disparities in distribution and reaction

Grade surprise isn’t equally distributed, Robinson said. Underrepresented minority students and first-generation students are more likely to be surprised by their grades. And women feel more disappointment when they receive lower grades.

Robinson and her colleagues have been sharing context about grades to try to ease some of the pain of grade surprise. For instance, in computer science and informatics classes at Indiana, women generally receive higher grades than men. In chemistry, women and men receive similar grades, although all receive lower grades than they did in high school.

“So women may feel that more, that disappointment in themselves, that setback of, ‘Oh, maybe I don’t belong,’” Robinson said. “But that’s where we could say to them that they may be processing this differently but the GPA facts of it are that they are doing the same.”

An analysis of data at Indiana shows that many students bounce back after the shock of an initial grade. They expect an A, receive a C but then eventually get an A in the course. Robinson and her colleagues want to better understand what students do to recover. They are also looking at the mindset of students who think they did poorly on, say, a midterm exam but actually did well. What happens if they enroll for the subsequent semester before they know their grade?

“What is that little detour through the course?” she asked. “How long does that hang in the air that you think you’ve bombed but you get that assignment back and got that A after all?”

A move toward wider use of data

Robinson describes the grade surprise project as one of many that “connect classes to the potential of big data.” Indiana has an ambitious program to help faculty members combine university demographic data with data about student performance in classes. That combination is often referred to as learning analytics. The Indiana program, known as Learning Analytics Fellows, has led to more than 50 projects since it started in 2015. It is run through a recently created Center for Learning Analytics and Student Success.

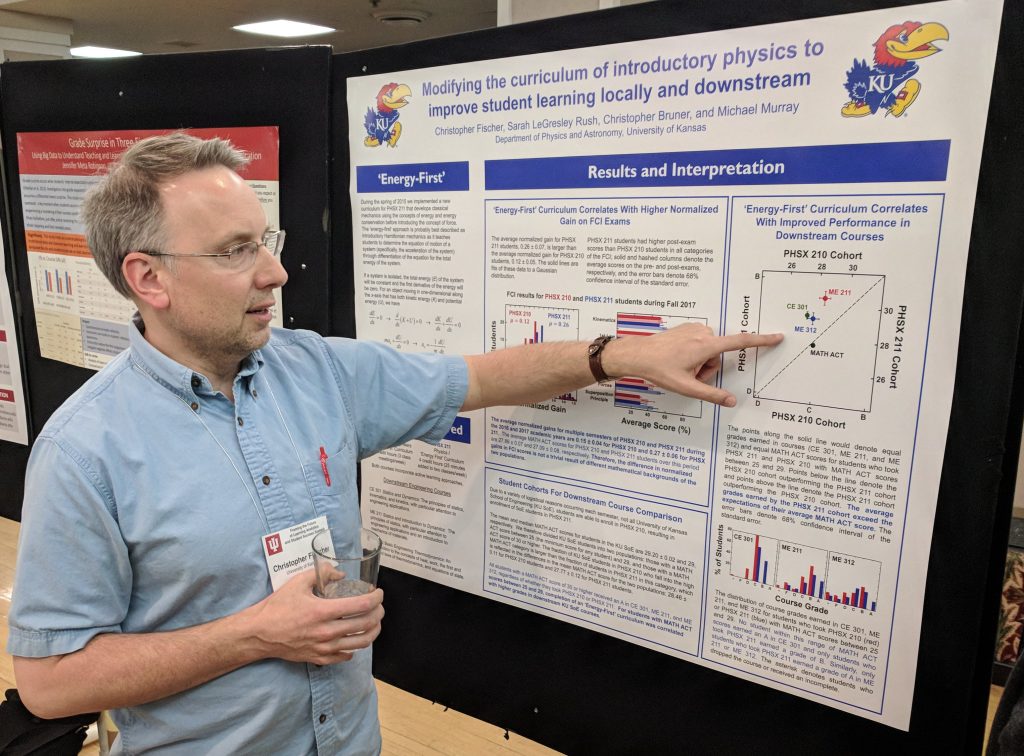

We have been working on a similar project at KU, though at a smaller scale. An AAU mini-grant through the Center for Teaching Excellence has allowed several STEM departments to use university data to learn more about their students and about the paths they take through various curricula. The recently created Office of Analytics and Institutional Research (formerly the Office of Institutional Research and Planning) has continued the momentum around wider application of university data. One of its divisions focuses on academic data analytics and is looking at ways of making more data available to faculty members.

These types of data projects allow instructors and departments to ask new questions about curricula, with an eye toward improving student retention and graduation rates. As Robinson explained in her talk at Indiana, this use of data is driving culture change as universities find ways to scale learning analytics even as they explore the potential of data in education. Robinson emphasized the importance of providing context for data and of applying “interpretive muscle” to tease out insights.

“These are drivers for change at all of our universities,” she said.

Doug Ward is the associate director of the Center for Teaching Excellence and an associate professor of journalism. You can follow him on Twitter @kuediting.
